On Raft's Reconfiguration Bug

Below is a high level overview of the Raft reconfiguration bug cases laid out in Diego Ongaro’s group post, which described the problematic scenarios in Raft’s single server reconfiguration (i.e. membership change) algorithm. Configurations are annotated with their terms i.e., a config \(X\) in term \(t\) is shown as \(X^t\).

-

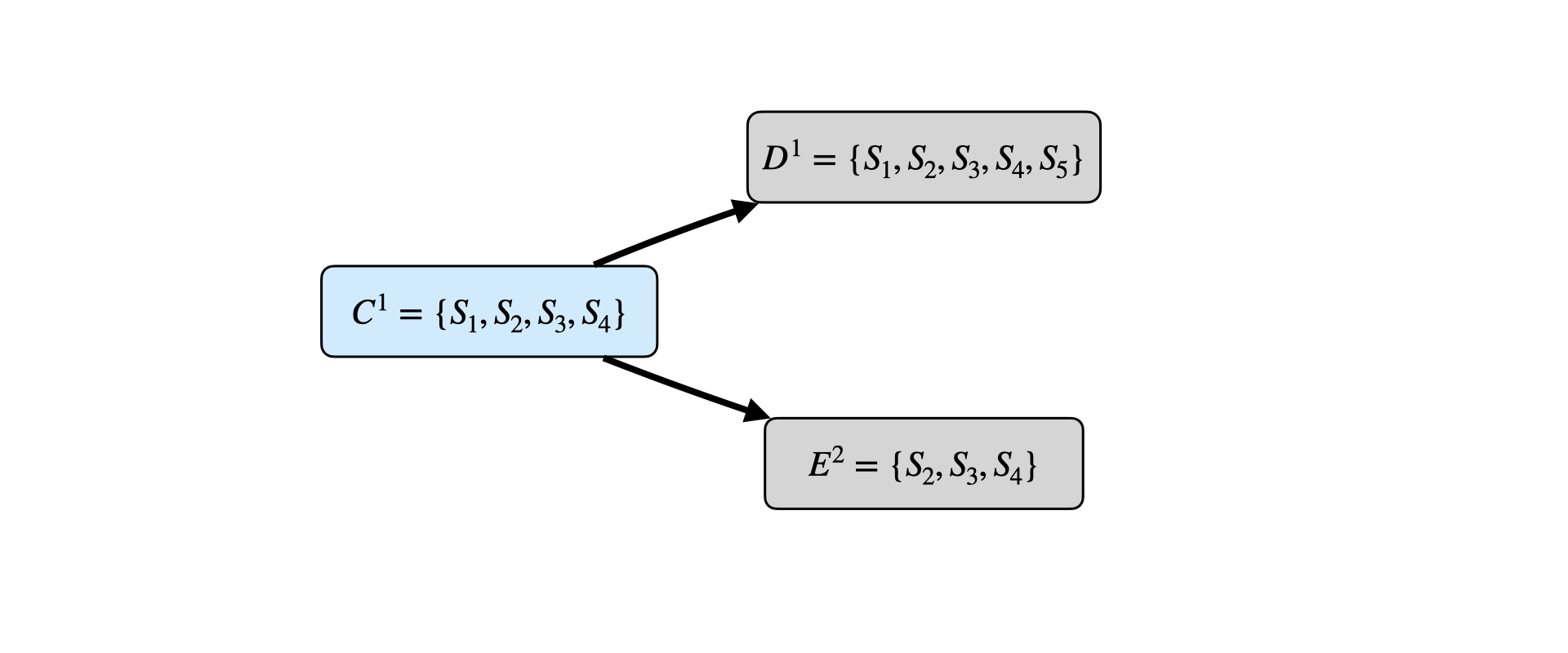

One add, one remove

-

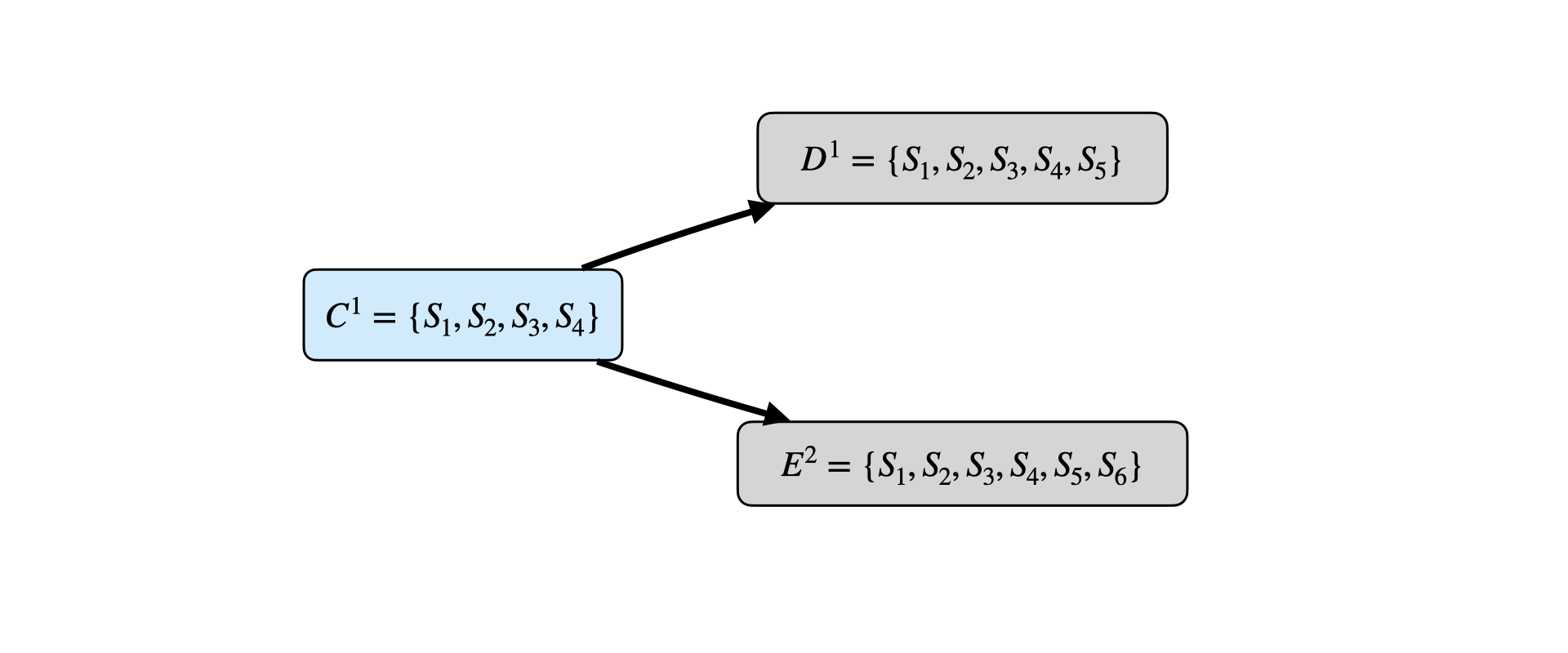

Two adds

-

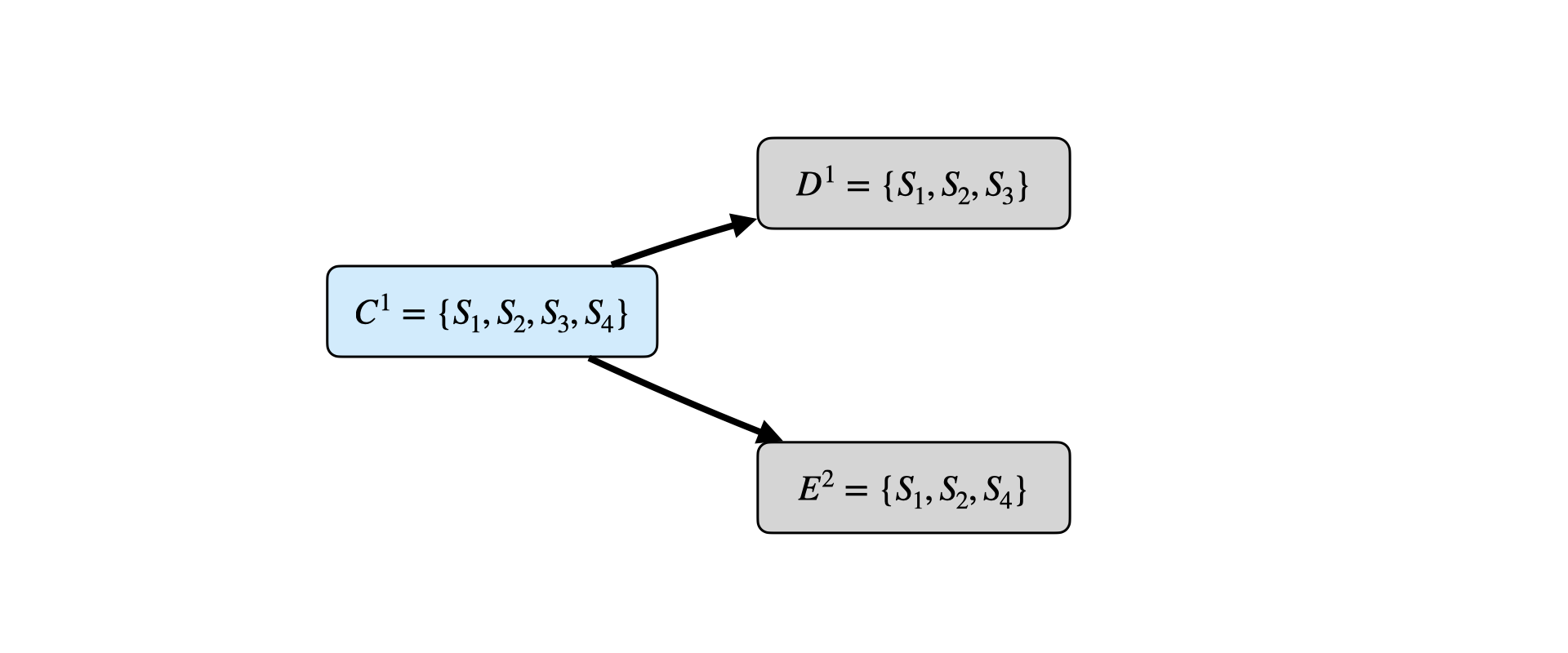

Two removes

I view all of these bug cases as instances of a common problem related to config management when logs diverge (i.e., when there are concurrent primaries in different terms). The bug arises in each case due to the fact that each child config (\(D\) and \(E\)) has quorum overlap with its parent \(C\) (due to the single node change condition), but the sibling configs don’t have quorum overlap with each other. These scenarios are problematic because, for example, in case (1), config \(D\) could potentially commit writes in term 1 that are not known to leaders in config \(E\) in term 2 or higher (since \(D\) and \(E\) don’t have quorum overlap), breaking the fundamental safety property that earlier committed entries are known to newer leaders.

As pointed out, this underlying problem should be avoided when using the joint consensus approach since in that case each child config will continue to contact a quorum in its parent config.

The Proposed Fix

Diego proposes the following fix:

The solution I’m proposing is exactly like the dissertation describes except that a leader may not append a new configuration entry until it has committed an entry from its current term.

As described above, the underlying bug can be seen as stemming from the fact that when log divergence occurs, even though each child config has quorum overlap with its parent (due to the single node change condition), the sibling configs do not necessarily have quorum overlap with each other.

So, upon election, before doing any reconfiguration, you actually need to be sure that any sibling configs are disabled i.e., prevented from committing writes, electing leaders, etc. You achieve this by committing a config in the parent config in your term, which disables all sibling configs in lower terms. I think this concept is clearer to see when thinking about the structure of global Raft system logs over time as a tree, similar to the concepts discussed here, and some of the formalization sketches here.

Similarly to Diego’s proposal, this fix is achieved in MongoDB’s reconfiguration protocol by rewriting the config term on primary election, which then requires this config in the new term to become committed before further reconfigs can occur on that elected primary.

A Note on Relaxing the Single Node Change Condition

The single node change condition (i.e. reconfigurations can only add or remove a single node) proposed in the original Raft dissertation is sufficient to ensure that all quorums overlap between any two configurations \(C_{old}\) and \(C_{new}\). Even without resorting to the joint consensus mechanism, though, this condition can be relaxed slightly, to permit additional, safe reconfigurations that are not allowed under the single node change rule.

Specifically, for a reconfiguration from \(C_{old}\) to \(C_{new}\), if we simply enforce that

\[QuorumsOverlap(C_{old}, C_{new})\]holds, where:

\[\begin{aligned} &Quorums(S) \triangleq \{s \in 2^{S} : |s| \cdot 2 > |S|\} \\ &QuorumsOverlap(S_i,S_j) \triangleq \forall q_i \in Quorums(S_i), q_j \in Quorums(S_j) : q_i \cap q_j \neq \emptyset\\ \end{aligned}\]then this explicitly ensures reconfiguration safety, without relying on the single node change restriction.

We can compare the generalized condition above with the single node change condition by observing the space of possible reconfigurations under each, for varying numbers of global servers. In the reconfiguration transition graphs below, blue edges represent single node change reconfigurations, and green edges represent reconfigurations that are possible under the generalized condition but not under the single node change condition. Note also that we always explicitly disallow starting in or moving to empty configs.

2 Servers

With only 2 servers, the single node change condition is equivalent to the generalized condition (note the absence of green edges):

3 Servers

Even with 3 servers the generalized condition admits more possible reconfigurations:

For example, moving between \(\{s_1,s_2\}\), \(\{s_2,s_3\}\), or \(\{s_1,s_3\}\) (i.e. any size 2 config) in one step is safe under the generalized condition, since quorums are of size 2 in both configs, which always intersect. These reconfigurations are not allowed under the single node change condition, though, since they require 1 add and 1 remove.

4 Servers

With 4 servers, even more additional reconfigurations are allowed.

In particular, note the ability to move from any 4-node config to any 2-node config in one step, since 4 node configs have quorums of size 3, which always intersect with the size 2 quorums of any 2 node config. Some 2 node configs can still also move directly between each other, even without a single node difference, as in the 3 server setting.