Cracking Go's PRNG For Fun and (Virtual) Profit

A friend recently built Mongobucks, a recreational Golang app that allowed users to exchange and gamble with a virtual currency. Every user starts with a fixed number of virtual tokens, and they could give or receive these tokens from other users. It also included a basic “gambling” feature. This feature would allow you to wager some amount of tokens, and then bet on the outcome of a virtual coin toss. Not long before this, I had listened to a story about Russian hackers who were able to crack casino slot machines by exploiting their weak random number generators, and I was curious if something similar would be possible here.

The application source code was publicly available, which allowed me to investigate the app internals. The gambling logic utilized Go’s math/rand package for the generation of virtual coin toss outcomes, which got my initial interest. Of course, any application concerned with security should be using a cryptographically secure random number generator, which Go provides as part of its crypto/rand package. As a personal challenge, though, I wanted to see how difficult it would be to crack Go’s default number generator and beat the app’s gambling system.

Application Architecture

The structure of the Mongobucks app was very simple. There was a single server that handled incoming requests to transfer money between users, or to execute new gambles. There was a backend database that stored user information and logged operations. Every gamble that occurred, and its outcome, would be saved, along with a timestamp of when that gamble occurred. Users could interact with the app via a Slack bot or a web interface. By scraping the public web API, it was possible to obtain a full, ordered list of all gambles that ever occurred. The application server used a single, global random number generator to simulate the virtual coin tosses, so my initial hypothesis was very simple: every gamble corresponds to one generation of the app’s internal random number generator. This formed the basis of my initial hacking approach: given a sequence of values generated by the internal random number generator, figure out a way to predict the next value that would be generated. This would allow me to predict the outcome of any gamble with perfect accuracy. In the rest of this post, I will refer to the sequence of gambles as a binary sequence, since each gamble, and corresponding random number generator output, is either a heads or a tails i.e. 0 or 1.

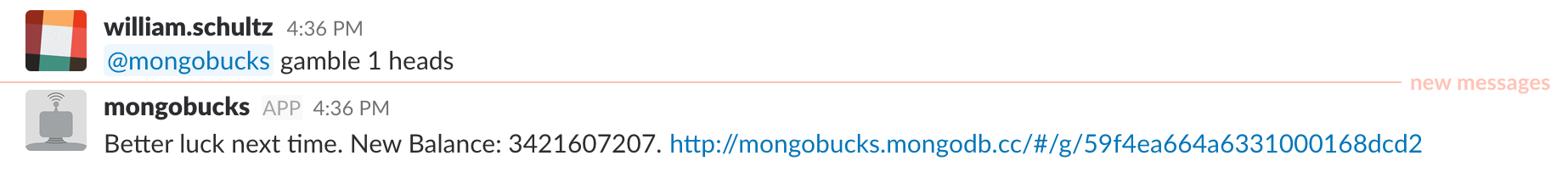

The Mongobucks Slack bot interface

The Mongobucks Slack bot interface

A Bad Seed

The interface to Go’s random number generator is provided in math/rand/rand.go. Go’s core pseudo-random number generator algorithm (math/rand/rng.go) is not too well documented but after some searching it appeared to be an implementation of a well known algorithm, the Additive Lagged Fibonacci Generator. This is a good article that describes some of the internal details of Go’s number generator.

A random number generator “source” is an instance of a random number generator with an encapsulated internal state. Different RNG sources can be advanced independently of each other without affecting the internal state of a different source. To seed a source, Go provides the following function

1

2

3

4

// Seed uses the provided seed value to initialize the generator to a

// deterministic state. Seed should not be called concurrently with any

// other Rand method.

func (r *Rand) Seed(seed int64)

which takes a 64-bit integer as its argument. Random number generator seeds are a way to initialize a generator source to some deterministic state. This means that that two separate sources, if initialized with the same seeds, will produce the same sequence of outputs. The rand.Seed function relies on another internal function, the Seed function in math/rand/rng.go, which executes the core seeding logic:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

// Seed uses the provided seed value to initialize the

// generator to a deterministic state.

func (rng *rngSource) Seed(seed int64) {

rng.tap = 0

rng.feed = _LEN - _TAP

seed = seed % _M

if seed < 0 {

seed += _M

}

if seed == 0 {

seed = 89482311

}

x := int32(seed)

for i := -20; i < _LEN; i++ {

x = seedrand(x)

if i >= 0 {

var u int64

u = int64(x) << 40

x = seedrand(x)

u ^= int64(x) << 20

x = seedrand(x)

u ^= int64(x)

u ^= rng_cooked[i]

rng.vec[i] = u

}

}

}

The details of the seed arithmetic aren’t that important, but there was one intriguing part. The underscored variables that appear in this function are constants defined at the top of the same file:

1

2

3

4

5

6

7

8

9

10

const (

_LEN = 607

_TAP = 273

_MAX = 1 << 63

_MASK = _MAX - 1

_A = 48271

_M = (1 << 31) - 1

_Q = 44488

_R = 3399

)

The value of _M looked suspicious i.e. line 6 of the above Seed function:

seed = seed % _MThe given seed argument is being taken modulo _M = (1 << 31) - 1 (\(2^{31} - 1\)). So, even though the seed argument is given as a 64-bit integer, only 31 bits of it are actually used. In other words, there are only \(2^{31} - 1\) unique seeds, since the seed argument undergoes the modulo operation before being used in any of the actual seeding logic. This seemed a bit odd. When I went back to look at the official Go documentation, I realized that this detail is, in fact, mentioned. It would be easy to miss, though, especially if you use the Seed function by just looking at its function signature. The Go documentation for math/rand.Seed reads:

Seed uses the provided seed value to initialize the default Source to

a deterministic state. If Seed is not called, the generator behaves

as if seeded by Seed(1). Seed values that have the same remainder

when divided by 2^31-1 generate the same pseudo-random sequence.

This finding inspired a new approach. With \(2^{64}\) seed possibilities, it had previously seemed infeasible to me to try any kind of brute force approach to find the original seed. With only \(2^{31}\) seed possibilities, though, it seemed more plausible.

Finding the Seed

If I could figure out the initial seed, then I would have a way to know the entire future sequence of gambles. The app server seeded the global random number generator once at startup, and generated random numbers for gambles based on that initial seed, until the server process was stopped and started again. As I mentioned above, I was able to compile an ordered sequence of all gambles that ever occurred. In the following sections I will refer to this global sequence of gambles as \(GambleSeq\).

If the server had been restarted a few times over the course of the app’s lifetime, there should be positions within \(GambleSeq\) where the sequence was reseeded. If I could find the most recent reseed point, and its seed, then I would be able to predict all future outputs of \(GambleSeq\) by continuing from the reseed point.

To find the reseed points within the sequence, I tried to use prefixes of random number sequences as a way to “fingerprint” a random sequence. Since there are only \(2^{31}-1\) unique seeds, there must be no more than \(2^{31}-1\) unique random number sequences. So, each sequence should have a unique 31-bit prefix.

Even if there were prefix collisions between sequences (which I deemed very unlikely), the sequences with colliding prefixes could easily be distinguished by looking at a longer sequence prefix. With this in mind, I created a lookup table that mapped length 31 sequence prefixes to the seeds that produced them. The final table looked something like the following:

| Sequence Prefix | Seed |

|---|---|

| 0111000101100000010101000011100 | 0 |

| 0010011101101001110100010101101 | 1 |

| 0011001010100110100000001101101 | 2 |

| ... | ... |

| 0110110010100000110000000001011 | 2147483647 |

Each key is a 31-bit value, and each value is a 64-bit seed. For simplicity, both were stored as 64-bit values, so each key-value pair occupied around 16 bytes (8 bytes for each 64-bit integer). There are \(2^{31}-1\) unique seeds, so the whole table took up approximately:

\[2^{31} \; \text{seeds} * 16 \; \text{bytes/entry} = 34,359,738,368 \; \text{bytes} \approx 32 \; \text{GB}\]I generated the lookup table using a Go script that inserted the key value pairs as documents in a MongoDB database. It took around an hour to generate on a 12-core (Intel i7-4930K CPU @ 3.40GHz) Ubuntu Linux workstation.

This lookup table gave me the ability to efficiently find the seed for any given 31-bit sequence prefix. To find the reseed positions in \(GambleSeq\), I scanned through \(GambleSeq\) looking at subsequences of length 31 and looking up their corresponding seed in the table. I would then check to see if the seed produced the correct sequence beyond the 31 element subsequence. If it didn’t, I assumed it was a false positive i.e. it wasn’t actually a reseed point. Unfortunately, this approach didn’t turn up the results I was hoping for. I wasn’t able to find points in the sequence where a seed produced a subsequence that extended beyond 31 elements.

When I couldn’t produce satisfactory results with this approach, I went back to the drawing board and tried to verify that all of my assumptions about how the number sequences were getting generated were correct.

Random Interference

The source code for the app was available, so I was able to download it and run it locally. I wanted to check my assumption that every executed gamble corresponded to 1 random number generation. This was the basic assumption that my first approach relied on i.e. that I was observing contiguous sequences of gamble results and therefore contiguous outputs of the random number generator. When running the app on my Macbook and simulating some gambles, I was able to verify that each gamble moved the global random generator forward exactly 1 generation. After running the app for a while and exercising various other behaviors, this would hold true. Since this didn’t seem to match what I observed against the real app, I tried to make sure I was replicating the exact environment of the deployed app.

The production app ran in a Linux based Docker container, so I ran the app locally inside the same Docker container configuration. This setup produced noticeably different behavior regarding the way the app generated random numbers. For every gamble execution, I would check to see if the RNG skipped a single generation. It appeared that, at a constant interval, about every 30 seconds or so, the RNG would skip forward, on average, 12-13 generations. This seemed odd. Since the app uses Go’s global random number generator, any other code that makes a call to it would affect the state of the generator. There weren’t that many Go packages outside the standard library that the gambling code utilized. After searching the Go codebase a bit, however, I did find a couple noticeable places that make calls to the global random number generator. One which seemed significant was in the Go DNS resolution logic. The following, condensed code snippet is a function inside net/dnsclient_unix.go:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

// exchange sends a query on the connection and hopes for a response.

func exchange(ctx context.Context,

server,

name string,

qtype uint16,

timeout time.Duration) (*dnsMsg, error) {

// ...

// OMITTED CODE

// ...

for _, network := range []string{"udp", "tcp"} {

// TODO(mdempsky): Refactor so defers from UDP-based

// exchanges happen before TCP-based exchange.

ctx, cancel := context.WithDeadline(ctx, time.Now().Add(timeout))

defer cancel()

c, err := d.dialDNS(ctx, network, server)

if err != nil {

return nil, err

}

defer c.Close()

if d, ok := ctx.Deadline(); ok && !d.IsZero() {

c.SetDeadline(d)

}

out.id = uint16(rand.Int()) ^ uint16(time.Now().UnixNano())

in, err := c.dnsRoundTrip(&out)

if err != nil {

return nil, mapErr(err)

}

if in.truncated { // see RFC 5966

continue

}

return in, nil

}

return nil, errors.New("no answer from DNS server")

}

On line 21 in the above code snippet, there is a call to the global rand function that is used to generate a random DNS packet id. This was promising, since it seemed like DNS resolution could be a hot section of code for anything that makes network requests. If this was portion of code was in fact a source of RNG interference in the production app, though, there were still two questions to answer:

- Why were these skips observed only when run on the Docker container, but not when testing locally on my Mac?

- What explained the generation skips occurring at regular 30 second intervals?

Answer 1: Platform Dependent DNS Resolution

My working hypothesis was that the DNS resolution code was injecting extra skips into the global number generator, but I wanted to produce a test that would validate this. It turns out that this test also served as a way to see why the app behavior differed between the Docker container and my Macbook. I came up with this simple Go script:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

package main

import "fmt"

import "net/http"

import "math/rand"

func main() {

var seed int64 = 42

rand.Seed(seed)

fmt.Printf("Seeded 'rand' with %d\n", seed)

fmt.Printf("Pre HTTP request: %d\n", rand.Int())

http.Get("http://google.com")

fmt.Printf("Post HTTP request: %d\n", rand.Int())

}

It seeds math/rand with an fixed, arbitrary seed, generates a single number, makes an HTTP request, and then generates a second number. In the Go documentation on DNS resolution, there is a note about the existence of two different DNS resolvers. You can optionally use a native C library DNS resolver, or a pure Go resolver, and this can be configured via the GODEBUG environment variable. This is the terminal output of the above script when run with the two different DNS resolvers:

➜ GODEBUG=netdns=cgo go run test_http.go

Seeded 'rand' with 42

Pre HTTP request: 3440579354231278675

Post HTTP request: 608747136543856411

➜ GODEBUG=netdns=go go run test_http.go

Seeded 'rand' with 42

Pre HTTP request: 3440579354231278675

Post HTTP request: 3534334367214237261

The outputs are different, but why? It becomes clear if we look at the output of a Go program that prints out the first 6 numbers of the random sequence generated with seed 42:

Generation 0: 3440579354231278675

Generation 1: 608747136543856411

Generation 2: 5571782338101878760

Generation 3: 1926012586526624009

Generation 4: 404153945743547657

Generation 5: 3534334367214237261

When using the cgo DNS resolver, the HTTP request doesn’t affect the random number generator, but when using the go resolver, the HTTP request skips the random number generator forward by 4 generations! This seemed like a very clear sign that the DNS resolver was the likely culprit for random generator interference. The Go documentation for the net package backed up this hypothesis:

The method for resolving domain names...varies by operating system.

On Unix systems, the resolver has two options for resolving names.

It can use a pure Go resolver that sends DNS requests directly to the servers

listed in /etc/resolv.conf, or it can use a cgo-based resolver that calls C

library routines such as getaddrinfo and getnameinfo.

By default the pure Go resolver is used ... the cgo-based resolver is used instead

under a variety of conditions: on systems that do not let programs make direct DNS

requests (OS X),...

This matched the behavior I observed. On my Macbook, if the C resolver was being used, it wouldn’t be calling any Go code, and so the global random number generator would be unaffected. On Linux, however, the DNS resolver would advance the state of the global number generator, affecting the random number output I observed. This served as an explanation for the random number generator interference that I observed when running the app in the Linux Docker container.

Answer 2: The mgo MongoDB Database Driver

After determining that the DNS resolver was the most likely cause for generation skips, I wanted to figure out why they were happening at apparently regular intervals. If the app is dormant i.e. no users are active, it seemed like there could be very few possible components that would be making network requests and therefore causing DNS lookups. One candidate, however, was the database driver. In order to persist various types of application data, the server, on startup, creates a connection to a MongoDB Atlas replica set, by means of the mgo driver. I wanted to see if the driver could potentially be executing requests on some background thread, even while there was no explicit user activity. I tried to monitor the network traffic while the app was running locally, and I perused the mgo source code a bit keeping in mind the 30 second interval time. This bit of logic, in mgo/cluster.go seemed like a good candidate:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

// How long to wait for a checkup of the cluster topology if nothing

// else kicks a synchronization before that.

const syncServersDelay = 30 * time.Second

const syncShortDelay = 500 * time.Millisecond

// syncServersLoop loops while the cluster is alive to keep its idea of

// the server topology up-to-date. It must be called just once from

// newCluster. The loop iterates once syncServersDelay has passed, or

// if somebody injects a value into the cluster.sync channel to force a

// synchronization. A loop iteration will contact all servers in

// parallel, ask them about known peers and their own role within the

// cluster, and then attempt to do the same with all the peers

// retrieved.

func (cluster *mongoCluster) syncServersLoop() {

...

...

...

}

This status checking logic runs once every 30 seconds. This fit with the interval of generation skips, and also with the number of skips I was seeing, which was around 12 on average. An HTTP request skips the random number generator forward 4 iterations, and if the mgo driver was connecting to a 3-node replica set for a status check, that would fit with 4 generations * 3 connections = 12 generations per status check. I also verified this behavior by observing the app’s network traffic locally. It was clear that it would try to make connections to MongoDB cluster members periodically.

This was a satisfactory explanation for the random interference I was observing in the RNG output. Now I just needed to apply my findings to a new approach for cracking the gambling system.

Bringing it All Together

I now had what seemed like a complete understanding of how the random number sequences I observed were being generated. My model was as follows:

I could observe partial contiguous runs of random numbers, but there would be skips interspersed, in addition to potential reseed points. I had to take this into account when trying to predict future outputs.

Subsequence Fingerprints

The core of my approach still involved finding the latest reseed point and its seed value. Instead of looking for prefixes of generated sequences, though, I figured that if I could observe a contiguous subsequence of considerable length (e.g. ~64 elements), this could also act as a fingerprint for a random sequence, even if it wasn’t the prefix. My thought was that it would be very unlikely for two different random sequences (of some reasonable finite length) to contain exactly the same 64-element subsequence. There are \(2^{64}\) possible binary sequences of length 64, but, in a random binary sequence of say, length 5000, there are only around 5000 different subsequences that appear (think of sliding a 64-element window along the 5000 element sequence). So, in any one length \(N\) sequence you only see around \(N\) of the \(2^{64}\) possible length 64 subsequences. This means the probability of a specific length 64 subsequence appearing in a length \(N\) sequence should be extremely low (\(\approx \frac{N}{2^{64}}\)), as long as \(N << 2^{64}\).

Building an Autogambler Bot

To figure out the latest seed, we can feed a large number of gambles into the system to ensure that we observe a contiguous output sequence i.e. there are no generation skips. If we are able to predict, roughly, when the server was last restarted, we can estimate how many generations have passed in total due to the periodic status checks. We can then have a Go script that generates random sequences of at least that length, and we can search for the observed subsequence in the sequence generated by each seed. If we find the observed subsequence in a sequence, then we should have our seed.

There is something important to note here. Early on, I noticed that the app actually let you execute gambles with a wager of 0 tokens. It tells you the result of the gamble coin toss, but no money is ever gained or lost. This was one of the original discoveries that inspired my investigation into the random number generator. This could, arguably, be considered a bug, but it’s something that doesn’t really hurt or help malicious users. In this case, due to the app’s weak random number generator, it allowed for information leakage, but, with a cryptographically strong number generator, this would be a non-issue. This subtle behavior, though, allowed me to glean helpful information about the internal number generator state.

Once we have determined the seed we can start gambling. Since we know that any continuous runs of the random number generator will be periodically interrupted, we take this into account when coming up with a gambling strategy. Basically, the strategy is the following:

- Feed a small number of gambles into the system as fast as possible, so as to produce a run of gambles, call it \(Primer\), with (hopefully) no random number generator skips.

- Since we know the full random number sequence (because we found the seed), use the sequence \(Primer\) to locate ourselves in the sequence. It should be easy to approximate where in the sequence we are since we can estimate how long it’s been since the server has started.

- Once we determine the current RNG generation, we start gambling, using each next output of the random number sequence as our prediction. We gamble continuously until the first loss, at whatever desired rate of risk. Once we lose, we assume that a periodic status check interfered with our continuous generation run.

- Wait a small period of time for the status check to finish. Then go back to step 1 and repeat.

This strategy, even with occasional losses, or unexpected blips, was ultimately successful, as evidenced my final Mongobucks balance, that well exceeded the billion token mark:

Concluding Thoughts

For me, this was an interesting exploration of Go’s random number generator and some of its unexpected behaviors. Default random number generators should always be considered insecure, but I wanted to see what it would actually take to beat one that uses a non-trivial algorithm. It turned out to be a good exercise in programming, debugging, and penetration testing. For those interested, there is a somewhat unstructured collection of code that I used throughout this process located here. They are mostly one-off scripts and probably can’t be used for anything general purpose, but they represent a dump of the tools I used during this hacking process. Also, you can view the original Mongobucks app source code here.